Developing applications with Large Language Models (LLMs) requires robust observability, monitoring, and debugging. While PromptWatch offers a solid foundation for managing LLM operations, its feature set might not align perfectly with every team’s specific workflow, technical stack, or scaling requirements. Whether you’re hitting limitations, seeking more advanced analytics, or simply exploring the market, finding the right tool is critical for optimizing performance, controlling costs, and ensuring a reliable user experience. This guide provides a comprehensive breakdown of the leading PromptWatch alternatives, helping you navigate a crowded and complex market.

We will dive deep into seven distinct platforms, moving beyond surface-level feature lists to offer a practical analysis of each tool. For every alternative, you will find a detailed overview covering core functionalities, ideal user profiles, and pricing information where it is publicly available. We’ll also provide an honest assessment of pros and cons based on real-world use cases, giving you the insights needed to make an informed decision. As you explore the landscape of LLM observability and support tools, note that adjacent platforms such as supportgpt are also contributing to the evolving ecosystem.

This resource is structured to save you time and effort. Each entry includes screenshots for a clear visual reference, direct links to get started, and practical tips for potential migration. Our goal is to equip your team, whether you’re a B2B SaaS startup or a digital marketing agency, with the necessary information to select the LLM observability platform that best fits your project’s unique demands. By the end, you’ll have a clear understanding of your options and be ready to choose the most effective tool to build, monitor, and scale your AI-powered applications.

1. Airefs

Airefs positions itself as a specialized platform for marketing and content teams, diverging from engineering-centric observability tools. It’s built for brands that want to not only track their presence in AI-generated answers but actively influence it. This makes it one of the more action-oriented PromptWatch alternatives available, focusing on what marketers call AI Engine Optimization (AEO). The platform connects prompt monitoring directly to tangible tasks, helping you see where your brand is mentioned and providing a clear path to increase those mentions.

The core workflow revolves around tracking brand citations across major AI models like ChatGPT and Google AI Overviews. Airefs then identifies the specific sources (articles, reviews, Reddit threads) that these models pull from. This “source intelligence” is its standout feature. Instead of just knowing you were mentioned, you learn why, enabling your team to update on-site content or participate in key off-site discussions to improve visibility.

Key Features & Ideal User

- AI Search Analytics: Measures mentions, citations, impressions, and clicks generated from AI answers.

- Source Intelligence: Traces AI answers back to the original source URLs, including Reddit threads and forums.

- Action Queues: Creates a prioritized task list for on-site content edits and off-site community engagement.

- Competitor Benchmarking: Tracks your AI visibility against key competitors for share-of-voice analysis.
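To make the share-of-voice idea from the competitor benchmarking bullet concrete, here is a minimal sketch of the arithmetic. It is not Airefs code: the brand names, the `observed` sample, and the `share_of_voice` helper are all hypothetical, and it assumes you already have one record per AI answer that cited a brand.

```python
from collections import Counter


def share_of_voice(mentions: list[str]) -> dict[str, float]:
    """Return each brand's fraction of all recorded mentions."""
    counts = Counter(mentions)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {brand: count / total for brand, count in counts.items()}


# Hypothetical sample: one entry per AI answer that cited a brand.
observed = ["acme", "acme", "globex", "acme", "initech", "globex"]
sov = share_of_voice(observed)

# Print brands from highest to lowest share.
for brand, share in sorted(sov.items(), key=lambda item: -item[1]):
    print(f"{brand}: {share:.0%}")
```

Tracking this ratio over time, rather than raw mention counts, is what makes a comparison against competitors meaningful when the total number of sampled answers changes.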

Best for: In-house marketing teams, SEO agencies, and growth marketers focused on improving their brand’s visibility and getting recommended in AI search results. If your goal is to connect prompt performance to marketing ROI, Airefs is purpose-built for that.

Pricing, Pros & Cons

Airefs doesn’t list public pricing tiers, instead opting for custom packages based on usage and needs. A “done-for-you” agency service is also available for teams needing execution support.

| Pros | Cons |
|---|---|
| Connects monitoring directly to marketing actions (AEO/GEO). | As an early product, per-engine coverage depth may be evolving. |
| Strong focus on actionable insights, especially from Reddit and forums. | Pricing is not transparent; requires a demo to get a quote. |
| Offers an agency service for teams without the internal capacity to execute. | Primarily focused on marketing use cases, less so on engineering observability. |

This platform is a strong contender for those looking to operationalize their AEO strategy. To see how it fits into the broader landscape, you can explore a comparison of the best AEO tools for a deeper dive.

2. LangSmith (by LangChain)

LangSmith is a powerful, engineering-first observability platform designed for developers building and deploying LLM applications and agents. Unlike marketing-focused tools, it provides deep instrumentation and tracing capabilities, making it an ideal PromptWatch alternative for teams needing to debug, evaluate, and monitor complex agentic workflows. The platform is built by the creators of LangChain, ensuring tight integration with one of the most popular LLM development frameworks.

The core value of LangSmith lies in its detailed tracing, which allows developers to visualize every step of an agent’s execution. This granular insight is critical for diagnosing errors, optimizing performance, and understanding cost drivers. It also features robust evaluation tools for running both offline regression tests and online quality monitoring, essentially creating a CI/CD pipeline for your prompts and agents. This focus on developer-centric workflows makes it a standout for technical teams.
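The step-by-step tracing described above can be pictured as a tree of timed spans. The sketch below is a generic illustration using only the Python standard library. It is not the LangSmith SDK, and the `Tracer`, `Span`, and `render` names are hypothetical.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field


@dataclass
class Span:
    name: str
    children: list["Span"] = field(default_factory=list)
    duration_ms: float = 0.0


class Tracer:
    """Hypothetical tracer that records nested steps as a tree."""

    def __init__(self) -> None:
        self.root = Span("root")
        self._stack = [self.root]

    @contextmanager
    def span(self, name: str):
        node = Span(name)
        self._stack[-1].children.append(node)  # attach to the current parent
        self._stack.append(node)
        start = time.perf_counter()
        try:
            yield node
        finally:
            node.duration_ms = (time.perf_counter() - start) * 1000
            self._stack.pop()


def render(span: Span, depth: int = 0) -> list[str]:
    """Flatten the span tree into indented lines for display."""
    lines = [f"{'  ' * depth}{span.name}"]
    for child in span.children:
        lines.extend(render(child, depth + 1))
    return lines


tracer = Tracer()
with tracer.span("agent_run"):
    with tracer.span("retrieve_documents"):
        pass  # a tool call would go here
    with tracer.span("call_model"):
        pass  # a model call would go here

print("\n".join(render(tracer.root)))
```

A hosted platform adds storage, search, and a UI on top of this kind of structure, but the parent/child relationship between steps is the core of what makes an agent run debuggable.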

Key Features & Ideal User

- LLM Tracing & Debugging: Provides a comprehensive view of agent runs, tool calls, and model inputs/outputs.

- Monitoring & Analytics: Tracks quality metrics, cost, latency, and token usage across applications.

- Testing & Evaluation: Supports online and offline evaluations with datasets and human annotation queues.

- Flexible Deployment: Offers cloud, hybrid, or self-hosted options with enterprise-grade controls and SSO.

Best for: Engineering teams, AI/ML developers, and companies building sophisticated LLM-powered applications. If your primary need is deep technical observability and establishing a rigorous CI/CD process for your agents, LangSmith is designed for you.

Pricing, Pros & Cons

LangSmith offers a free developer tier for individuals and small projects. Paid plans are usage-based with multiple meters for traces, runs, and uptime, which provides flexibility but can be complex to forecast.

| Pros | Cons |
|---|---|
| Deep integrations with LangChain and other modern agent stacks. | Pricing model with multiple meters can be complex to manage and predict. |
| Flexible hosting options, including self-hosted for data privacy. | Best suited for teams with engineering resources to instrument and operate. |
| Strong free developer tier and a dedicated plan for startups. | Less focused on marketing use cases like brand visibility or AEO. |

For teams building production-grade agents, LangSmith offers the essential tools for ensuring reliability and performance. This developer-centric approach contrasts with platforms focused on search marketing intelligence, which prioritize brand visibility in AI answers.

3. Langfuse

Langfuse positions itself as a developer-first, open-source LLM observability platform. It provides a robust, self-hostable solution that appeals to engineering teams wanting full data control, alongside a polished and scalable cloud offering. This dual approach makes it one of the most flexible PromptWatch alternatives for teams that prioritize both technical depth and ease of use. It focuses on the core engineering problems of tracing, debugging, and evaluating LLM applications from development to production.

The platform’s strength lies in its comprehensive feature set, combining detailed tracing of LLM calls with integrated prompt management and model-based evaluation frameworks. Users can track costs, latency, and user feedback, then create datasets from production traces to fine-tune models or run regression tests. The open-source nature (MIT license) and straightforward migration path from self-hosted to cloud ensure that startups can begin for free and scale without getting locked into a proprietary system.

Key Features & Ideal User

- Detailed Tracing: Captures every step of an LLM chain, including costs, latency, and token counts.

- Prompt & Dataset Management: Version control prompts and create evaluation datasets directly from production data.

- Integrated Evaluations: Score LLM outputs against predefined metrics or other models to ensure quality.

- Open-Source & Self-Hosting: Offers a one-click, self-hostable version for full data privacy and control.
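As a rough illustration of what the tracing bullet means by capturing costs, latency, and token counts per step, the sketch below rolls those figures up for one trace. It does not use the Langfuse SDK. The `LLMCall` record, the step names, and especially the prices in `PRICE_PER_1K` are made-up assumptions for the example.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; real rates differ by provider and model.
PRICE_PER_1K = {"input": 0.001, "output": 0.002}


@dataclass
class LLMCall:
    step: str
    input_tokens: int
    output_tokens: int
    latency_ms: float


def call_cost(call: LLMCall) -> float:
    """Cost of one call under the assumed per-1K-token prices."""
    return (
        call.input_tokens / 1000 * PRICE_PER_1K["input"]
        + call.output_tokens / 1000 * PRICE_PER_1K["output"]
    )


def summarize(calls: list[LLMCall]) -> dict[str, float]:
    """Aggregate tokens, cost, and latency across every step of a trace."""
    return {
        "total_tokens": sum(c.input_tokens + c.output_tokens for c in calls),
        "total_cost_usd": round(sum(call_cost(c) for c in calls), 6),
        "total_latency_ms": sum(c.latency_ms for c in calls),
    }


trace = [
    LLMCall("rewrite_query", input_tokens=1000, output_tokens=500, latency_ms=120.0),
    LLMCall("answer", input_tokens=2000, output_tokens=1000, latency_ms=480.0),
]
summary = summarize(trace)
print(summary)
```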

Best for: Engineering teams, AI/ML developers, and startups looking for a transparent, open-source solution to debug, monitor, and improve their LLM applications. If you value data ownership and a clear path from a free, self-hosted setup to a managed cloud service, Langfuse is an excellent choice.

Pricing, Pros & Cons

Langfuse offers a generous free tier on its cloud platform and a completely free open-source version. Paid plans are usage-based, with clear pricing and discounts available for startups and educational institutions.

| Pros | Cons |
|---|---|
| Robust free and open-source offering provides an easy entry point. | Requires engineering effort to instrument applications effectively. |
| Clear, low-cost pricing with a straightforward upgrade path. | Some advanced enterprise features are gated to higher-tier plans. |
| Strong community adoption and transparent development. | Primarily an engineering tool; less focused on marketing analytics. |

The platform’s commitment to open-source principles makes it a trustworthy foundation for building reliable AI products. You can get started by visiting the official Langfuse website.

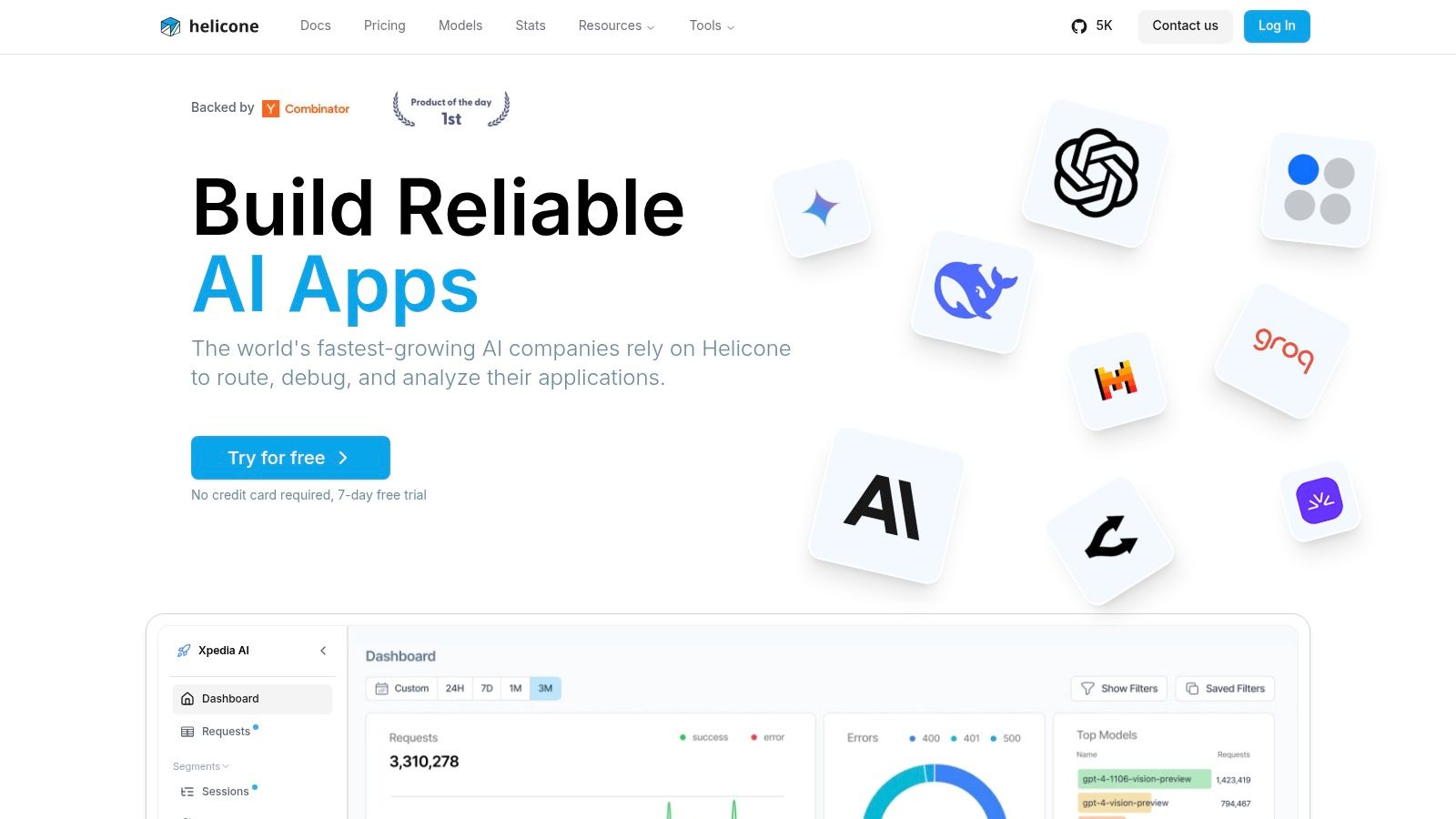

4. Helicone

Helicone integrates an AI gateway with an observability platform, creating a unified solution for managing and monitoring LLM applications. Unlike tools that only track requests, Helicone acts as a proxy, giving engineering teams control over API calls with features like automatic retries, fallbacks, and caching. This architecture makes it a powerful PromptWatch alternative for teams prioritizing reliability, cost optimization, and centralized governance alongside traditional monitoring.

The platform’s dual focus is its key differentiator. The gateway standardizes access to any model provider, while the observability layer logs every request, cost, and latency metric. This allows you to debug issues using session views, query logs with Helicone Query Language (HQL), and set up alerts for anomalies. For development, Helicone includes a prompt playground and evaluation tools to test and score prompt versions before deploying them.
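To show how retries, fallbacks, and caching fit together in a gateway, here is a simplified sketch. It is not Helicone's implementation or API: the `Gateway` class and the two provider functions are hypothetical stand-ins, and the cache is a plain exact-match dictionary.

```python
from typing import Callable

Provider = Callable[[str], str]


class Gateway:
    """Hypothetical gateway: exact-match cache, per-provider retries, ordered fallbacks."""

    def __init__(self, providers: list[Provider], max_attempts: int = 2) -> None:
        self.providers = providers
        self.max_attempts = max_attempts
        self.cache: dict[str, str] = {}
        self.log: list[str] = []

    def complete(self, prompt: str) -> str:
        if prompt in self.cache:
            self.log.append("cache_hit")
            return self.cache[prompt]
        for provider in self.providers:  # try providers in priority order
            for attempt in range(1, self.max_attempts + 1):
                try:
                    result = provider(prompt)
                except RuntimeError as exc:
                    self.log.append(f"{provider.__name__} attempt {attempt} failed: {exc}")
                    continue
                self.cache[prompt] = result
                self.log.append(f"{provider.__name__} ok")
                return result
        raise RuntimeError("all providers failed")


def flaky_primary(prompt: str) -> str:
    """Stand-in for a provider that is currently timing out."""
    raise RuntimeError("timeout")


def stable_fallback(prompt: str) -> str:
    """Stand-in for a healthy secondary provider."""
    return f"echo: {prompt}"


gateway = Gateway([flaky_primary, stable_fallback])
first = gateway.complete("hello")   # primary fails twice, fallback answers
second = gateway.complete("hello")  # served from the cache
print(gateway.log)
```

Because every request passes through one place, the same log that drives retries also becomes the observability record, which is the trade-off a proxy-based design makes.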

Key Features & Ideal User

- Universal AI Gateway: Centralizes API calls with caching, rate limiting, and automatic provider fallbacks.

- Observability Dashboards: Provides detailed analytics on usage, costs, latency, and user feedback.

- Prompt Management: Features a playground for experimentation and tools for evaluating prompt templates against datasets.

- Enterprise-Grade Security: Offers SOC-2 and HIPAA compliance options, plus an on-premise deployment model.

Best for: Engineering teams and CTOs who need a robust, all-in-one solution for managing API reliability, controlling costs, and monitoring performance. It’s particularly well-suited for organizations in regulated industries or those that require the flexibility of on-premise hosting.

Pricing, Pros & Cons

Helicone offers a generous free Hobby tier for up to 100,000 requests per month. Paid plans scale from there, starting with the Pro plan at $75/month for 500,000 requests and an Enterprise tier with custom pricing.

| Pros | Cons |
|---|---|
| Combines gateway reliability with analytics in one product. | Full capabilities require routing all calls through its gateway layer. |
| Generous free tier and transparent, scalable pricing. | Some advanced analytics features are limited on lower-tier plans. |
| Enterprise compliance options (SOC-2, HIPAA) and on-prem availability. | Might be overly complex for teams only needing basic logging. |

For developers seeking a platform that merges operational control with deep analytics, Helicone offers a compelling and secure package. You can learn more and get started at helicone.ai.

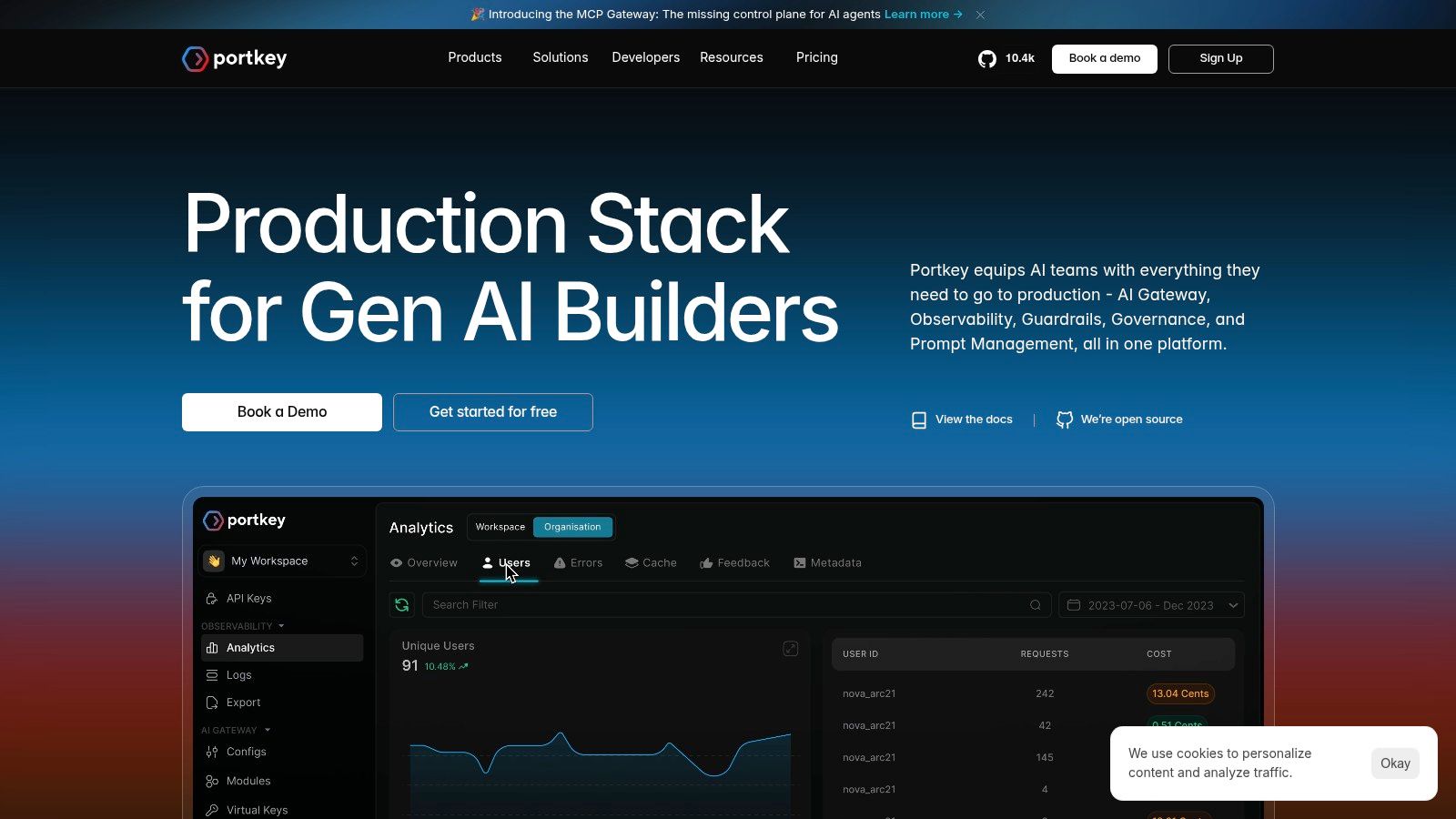

5. Portkey

Portkey acts as a comprehensive AI gateway and control plane designed for production environments. It centralizes operations for teams working with multiple LLMs, providing a universal API that handles routing, load balancing, and fallbacks automatically. This makes it a powerful PromptWatch alternative for engineering teams looking to standardize their AI stack, improve reliability, and gain deep observability into every API call. The platform combines infrastructure management with prompt engineering and governance, creating a unified solution for scaling AI applications.

The core strength of Portkey lies in its gateway functionality. By routing all LLM traffic through its universal API, developers can implement semantic caching to reduce costs and latency, set up automatic retries for failed requests, and enforce budget or rate limits. Its observability suite includes detailed logging, tracing, and feedback capture, while its prompt management tools offer versioning and a collaborative playground. This integrated approach simplifies the complex task of managing a multi-provider LLM strategy from a single point of control.
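Budget enforcement at the gateway is conceptually simple, as the sketch below suggests. It is an illustration only and does not reflect Portkey's API. The `BudgetGuard` class, the `BudgetExceeded` exception, and the dollar amounts are hypothetical.

```python
class BudgetExceeded(Exception):
    """Raised when a request would push spend past the configured limit."""


class BudgetGuard:
    """Hypothetical spend tracker that rejects requests once a budget is used up."""

    def __init__(self, monthly_budget_usd: float) -> None:
        self.monthly_budget_usd = monthly_budget_usd
        self.spent_usd = 0.0

    def charge(self, cost_usd: float) -> None:
        if self.spent_usd + cost_usd > self.monthly_budget_usd:
            raise BudgetExceeded(
                f"request of ${cost_usd:.2f} exceeds remaining "
                f"${self.monthly_budget_usd - self.spent_usd:.2f}"
            )
        self.spent_usd += cost_usd


guard = BudgetGuard(monthly_budget_usd=1.0)
guard.charge(0.5)
guard.charge(0.5)  # exactly reaches the limit, still allowed

try:
    guard.charge(0.25)
    rejected = False
except BudgetExceeded:
    rejected = True  # the gateway would block this call instead of forwarding it

print(guard.spent_usd, rejected)
```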

Key Features & Ideal User

- Universal AI Gateway: Manages API keys, load-balancing, fallbacks, and retries across multiple LLM providers.

- Observability & Governance: Provides comprehensive logs, traces, alerts, and tools for setting budgets and rate limits.

- Prompt Management: Features prompt templates, versioning, A/B testing, and an interactive playground for refinement.

- Enterprise-Ready: Supports RBAC, SSO, and options for VPC or private cloud hosting for enhanced security.

Best for: Engineering teams and developers building production-grade AI applications that rely on multiple LLMs. It is ideal for those who need a single platform to manage infrastructure, monitor performance, and control costs without building a custom solution.

Pricing, Pros & Cons

Portkey offers a generous free tier, with paid plans starting at $25/month for the “Growth” tier and custom pricing for enterprise needs.

| Pros | Cons |
|---|---|
| Clear entry-level pricing and a substantial free tier. | Best value is realized when all LLM traffic is routed via the gateway. |
| Combines gateway, observability, and guardrails in one tool. | Some advanced guardrails and enterprise features require higher tiers. |
| Practical log retention and data export options. | May be overly complex for teams only needing simple prompt logging. |

For teams needing to productionize their LLM stack, Portkey provides the necessary infrastructure and controls. You can learn more and get started on the Portkey website.

6. Traceloop

Traceloop enters the LLM observability space with a strong emphasis on open standards and flexibility, making it a powerful choice for engineering teams that prioritize control and integration. It provides comprehensive observability for LLM applications by shipping OpenLLMetry, an open-source (Apache-2.0) standard for instrumenting LLM calls across various providers. This positions Traceloop as one of the most adaptable PromptWatch alternatives for teams wanting to avoid vendor lock-in and route their data to preferred observability back-ends like Datadog or Grafana.

The platform is designed to give developers deep visibility into their application’s performance through detailed traces and spans for both LLM and vector database interactions. It integrates directly into the CI/CD pipeline, allowing for continuous evaluation and monitoring. For organizations in regulated industries, Traceloop offers on-premise deployment options and SOC2 support, addressing critical data security and compliance requirements that many cloud-only solutions cannot.
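The appeal of open-standard instrumentation is that the code emitting spans does not need to know where they end up. The sketch below illustrates that separation with a pluggable exporter interface. It is not the OpenLLMetry or OpenTelemetry API: the `Exporter` protocol, `InMemoryExporter`, `emit`, and the span attribute names are all hypothetical.

```python
from typing import Protocol


class Exporter(Protocol):
    """Anything that can receive a finished span."""

    def export(self, span: dict) -> None: ...


class InMemoryExporter:
    """Hypothetical back-end that simply collects spans in a list."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.spans: list[dict] = []

    def export(self, span: dict) -> None:
        self.spans.append(span)


def emit(span: dict, exporters: list[Exporter]) -> None:
    """Send one span to every configured back-end."""
    for exporter in exporters:
        exporter.export(span)


backend_a = InMemoryExporter("backend_a")
backend_b = InMemoryExporter("backend_b")

# An LLM call goes to both back-ends; a vector DB query goes to only one.
emit({"name": "llm.completion", "duration_ms": 420, "total_tokens": 512}, [backend_a, backend_b])
emit({"name": "vector_db.query", "duration_ms": 35, "top_k": 5}, [backend_a])

print(len(backend_a.spans), len(backend_b.spans))
```

Swapping a back-end then means changing the exporter list, not re-instrumenting the application, which is the lock-in argument in practical terms.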

Key Features & Ideal User

- OpenLLMetry Instrumentation: Uses an open-source standard to capture and route observability data, ensuring maximum flexibility.

- Traces & Spans: Provides granular visibility into LLM and vector DB calls to diagnose latency and errors.

- Monitoring & Evaluation Dashboards: Offers pre-built dashboards for performance tracking that integrate with CI/CD workflows.

- On-Prem & Enterprise Ready: Supports on-premise deployment and is SOC2 compliant for high-security environments.

Best for: Engineering and DevOps teams building complex LLM applications, especially within regulated industries like finance or healthcare. It’s also ideal for organizations that have an existing observability stack and want to pipe LLM data into it without being tied to a single vendor.

Pricing, Pros & Cons

Traceloop offers a generous free tier that includes up to 50,000 spans per month with no user seat limits. Custom enterprise plans are available for advanced features, on-premise deployment, and higher usage needs.

| Pros | Cons |
|---|---|
| Open-standard instrumentation (OpenLLMetry) avoids vendor lock-in. | Advanced analytics and features may require upgrading to enterprise plans. |
| Generous free tier makes it accessible for startups and small projects. | Teams may still need a separate gateway for routing and caching. |
| Enterprise-grade on-premise and SOC2 options for regulated organizations. | The focus on engineering observability makes it less suited for marketing teams. |

For developers who value open-source and flexible integrations, Traceloop is a compelling choice. You can learn more and get started on the Traceloop website.

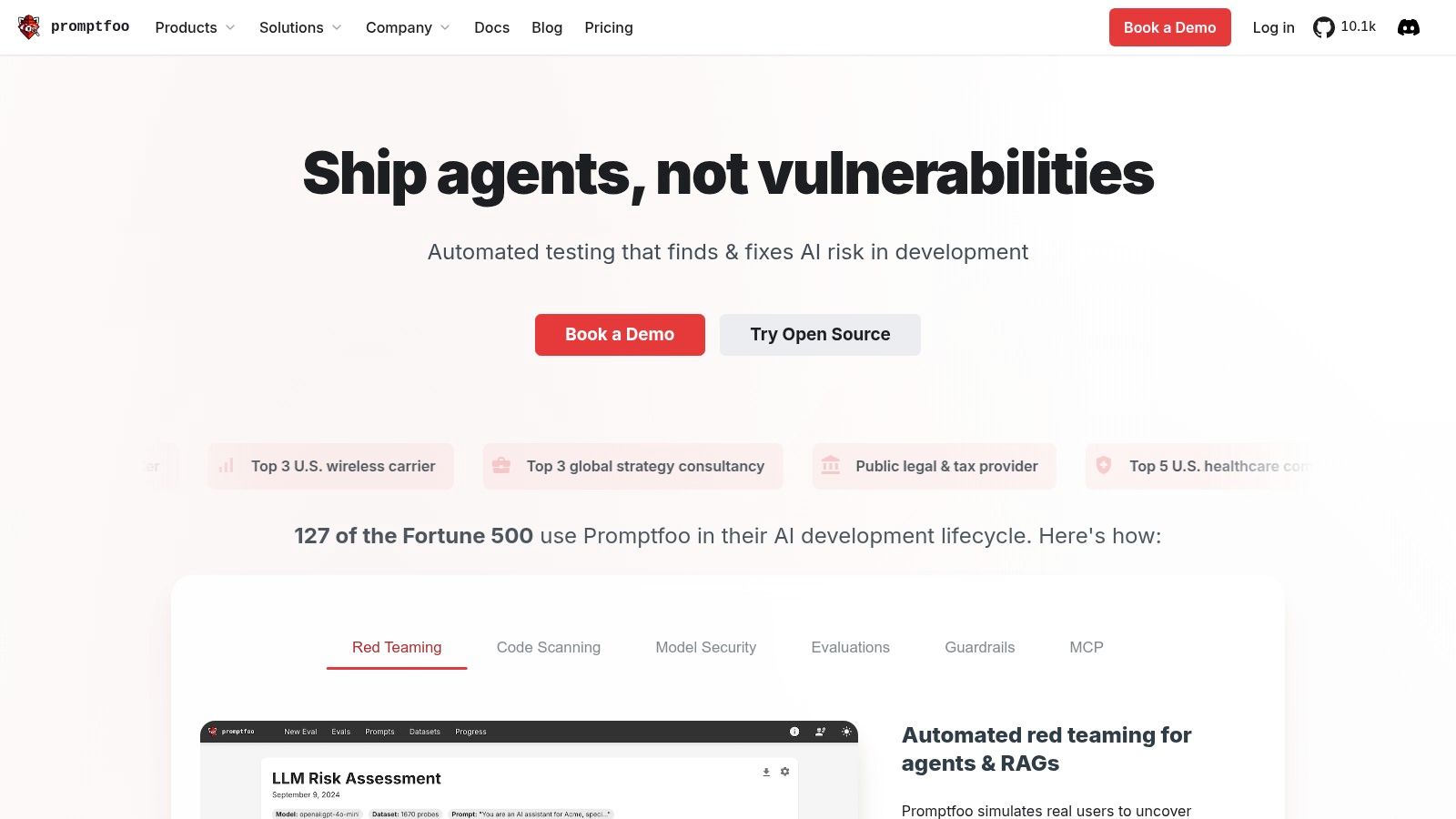

7. Promptfoo

Promptfoo takes a developer-first, open-source approach to LLM quality assurance, positioning itself as a toolchain for rigorous evaluation and security testing. Unlike platforms focused purely on production monitoring, Promptfoo is designed to be integrated directly into your CI/CD pipeline. This makes it a strong PromptWatch alternative for teams that prioritize automated testing for prompt, model, and RAG regressions before they ever reach production. Its core philosophy is to treat prompts and LLM outputs as code, subject to the same testing discipline.

The platform’s standout capability is its unified approach to quality and security. It allows you to run systematic evaluations for functional correctness and quality while simultaneously using red-teaming plugins to detect jailbreaks, biases, and other vulnerabilities. This dual focus ensures that your LLM applications are not only effective but also safe and reliable. By automating these checks, development teams can maintain high standards of performance and security with every new release, making it an essential tool for mission-critical AI systems.
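Treating prompts as code subject to tests can be sketched as a small assertion suite with a pass-rate gate. The example below is a generic illustration and does not use Promptfoo's configuration format or CLI. The `fake_model` function, the test cases, and the 0.9 threshold are hypothetical.

```python
from typing import Callable

Check = Callable[[str], bool]


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    if "France" in prompt:
        return "Paris is the capital of France."
    return "I am not sure."


# Each case pairs a prompt with an assertion on the model output.
TEST_CASES: list[tuple[str, Check]] = [
    ("What is the capital of France?", lambda out: "Paris" in out),
    ("What is the capital of Atlantis?", lambda out: "not sure" in out.lower()),
]


def run_suite(model: Callable[[str], str], cases: list[tuple[str, Check]]) -> float:
    """Return the fraction of cases whose assertion passes."""
    passed = sum(1 for prompt, check in cases if check(model(prompt)))
    return passed / len(cases)


pass_rate = run_suite(fake_model, TEST_CASES)

# In a CI job, a failing gate would translate into a non-zero exit code.
should_fail_build = pass_rate < 0.9
print(f"pass rate: {pass_rate:.0%}, fail build: {should_fail_build}")
```

Running a suite like this on every prompt or model change is what turns a regression from a production incident into a failed pull request check.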

Key Features & Ideal User

- Systematic Evaluations: Test for functional correctness, quality, and regressions in RAG systems.

- AI Red-Teaming & Security: Includes plugins for jailbreak detection, prompt injection, and custom guardrails.

- CI/CD Integration: Designed to plug directly into existing development workflows like GitHub Actions for automated testing.

- Open-Source & Enterprise Options: Offers a generous free community edition alongside on-premise solutions for data isolation.

Best for: Engineering and DevOps teams building LLM applications who need to automate quality and security testing. If your priority is embedding prompt evaluation into your development lifecycle and catching issues before deployment, Promptfoo is an excellent choice.

Pricing, Pros & Cons

Promptfoo offers a free, open-source community edition. Enterprise pricing for on-premise deployments and dedicated support requires contacting their sales team for a custom quote.

| Pros | Cons |
|---|---|
| Combines security and quality testing in a single workflow. | Enterprise pricing and SLAs require contacting sales. |
| Open-source community edition with generous usage allowances. | Requires engineering effort to set up test suites and CI integration. |
| Enterprise support and on-premise options are available. | More focused on pre-production testing than real-time observability. |

This tool is ideal for teams looking to enforce high standards of AI quality and safety. For those focusing on the marketing side of AI, understanding how to rank in ChatGPT can complement a robust technical strategy.

Final Thoughts

Navigating the landscape of LLM observability and prompt engineering platforms can feel overwhelming. While PromptWatch offers a solid foundation, the market is brimming with powerful PromptWatch alternatives, each tailored to solve specific, nuanced challenges in the AI development lifecycle. Moving beyond a one-size-fits-all approach is no longer just an option; it’s a strategic necessity for building robust, scalable, and cost-effective AI applications.

This guide has walked you through a curated selection of tools, from open-source powerhouses like Langfuse and Traceloop to comprehensive gateways like Portkey and Helicone. The key takeaway is that the “best” tool is entirely dependent on your unique context. Your choice will hinge on factors like your technical stack, team size, primary use case, and budget.

Key Takeaways and Your Next Steps

Before you commit to a platform, it’s crucial to distill your specific needs. Are you a solo developer who mainly needs request logging on a free tier, which Helicone’s Hobby plan covers, or an enterprise team requiring granular model evaluation and drift detection? Are you deeply embedded in the LangChain ecosystem, making LangSmith a natural fit, or do you need the flexibility of an open-source, self-hosted solution like Langfuse?

Here’s a simplified decision-making framework to guide your next steps:

- Define Your Core Problem: Are you primarily focused on debugging complex chains, optimizing prompt performance, reducing latency and cost, or ensuring AI safety and compliance? Your primary pain point will immediately narrow the field. For instance, if prompt optimization is your north star, tools like Promptfoo should be at the top of your list.

- Evaluate Your Tech Stack: Consider your existing infrastructure. Do you need a platform that is framework-agnostic, or are you heavily invested in a specific library like LangChain or LlamaIndex? LangSmith is a clear winner for LangChain users, while Portkey offers broad compatibility.

- Assess Your Team’s Scale and Expertise: A small team or startup might prioritize ease of setup and a generous free tier, making tools like Langfuse or the open-source Traceloop highly attractive. Larger organizations may need advanced collaboration features, role-based access control, and dedicated support.

- Run a Pilot Project (POC): Never underestimate the power of a proof of concept. Before migrating your entire production workload, select a small, non-critical project to test your top two or three choices. This hands-on experience will reveal more than any feature comparison chart ever could. Pay close attention to the ease of integration, the intuitiveness of the user interface, and the quality of the documentation.
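One way to keep a pilot comparison honest is to score each candidate on the three criteria named in the last step and weight them by what matters to your team. The sketch below shows the arithmetic only. The tool names, ratings, and weights are placeholders, not assessments of any product in this guide.

```python
# Hypothetical weights for the three POC criteria; adjust to your priorities.
WEIGHTS = {"integration": 4, "ui": 3, "docs": 3}

# Hypothetical 1-5 ratings collected from the team after each pilot.
RATINGS = {
    "Tool A": {"integration": 4, "ui": 3, "docs": 5},
    "Tool B": {"integration": 5, "ui": 4, "docs": 2},
}


def weighted_score(ratings: dict[str, int], weights: dict[str, int]) -> float:
    """Weighted average of the ratings on the same 1-5 scale."""
    total_weight = sum(weights.values())
    return sum(ratings[name] * weight for name, weight in weights.items()) / total_weight


ranked = sorted(
    ((tool, weighted_score(ratings, WEIGHTS)) for tool, ratings in RATINGS.items()),
    key=lambda pair: pair[1],
    reverse=True,
)

for tool, score in ranked:
    print(f"{tool}: {score:.1f}")
```

Agreeing on the weights before the pilot starts makes it harder to rationalize a favourite after the fact.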

Ultimately, the goal of these PromptWatch alternatives is to transform your AI development process from a black box into a transparent, data-driven operation. By implementing the right observability and prompt management tool, you empower your team to build better, more reliable AI products faster.

Frequently Asked Questions (FAQ)

What is the most important feature to look for in a PromptWatch alternative?

While this depends on your specific needs, the most critical feature for most teams is detailed tracing and logging. The ability to see the complete lifecycle of a request, including all inputs, outputs, latency, token usage, and any errors, is the foundational layer upon which all other features (like evaluation, debugging, and cost analysis) are built.

Are open-source alternatives like Langfuse or Traceloop ready for production use?

Yes, many open-source tools are production-ready and used by major companies. They offer the significant advantages of self-hosting (for data privacy and control), customizability, and a strong community. The main trade-off is that you are responsible for setup, maintenance, and scaling the infrastructure, whereas managed SaaS solutions handle this for you.

How much should I expect to pay for a prompt observability tool?

Pricing models vary widely. Many tools offer a generous free tier for individuals or small projects, typically with usage limits (e.g., a certain number of traces per month). Paid plans often scale based on usage (traces, events, or tokens processed), features, and the number of team seats. Expect to pay anywhere from $20 per month for a startup plan to thousands for an enterprise license with dedicated support.

Ready to gain unparalleled visibility into your brand’s presence in AI-generated search results? While the tools above excel at technical observability, Airefs focuses on a critical business outcome: ensuring your brand gets recommended by AI chatbots like ChatGPT and Perplexity. See exactly which AI models mention your brand, track your share of voice in AI search, and optimize your content to become a trusted source for generative AI.

Start tracking your AI search visibility today with Airefs.